This document describes my experience following the Apache document titled “Hadoop: Setting up a Single Node Cluster” [1], which is written for Hadoop version 3.0.0-alpha2 [2].

A. Prepare the guest environment

- Install VirtualBox.

- Create a 64-bit Linux virtual machine named “ubuntul_hadoop_master”. Give it 500MB of memory and a dynamically allocated VMDK disk of up to 30GB.

- In the network settings, the first tab should show Adapter 1 enabled and attached to “NAT”. In the second tab, enable Adapter 2 and attach it to “Host-only Adapter”. The first adapter gives the guest an internet connection; the second lets the host connect to services running in the guest.

- In the storage settings, attach a Linux ISO file to the IDE controller. Any distribution will do; I chose the minimal Ubuntu ISO [1] because of its small installation size. In the package selection menu, I left only the standard packages selected.

- Log in to the system.

- Set up the JDK.

$ sudo apt-get install openjdk-8-jdk

- Install ssh and pdsh, if they are not already installed, and make pdsh use ssh to reach hosts.

$ sudo apt-get install ssh

$ sudo apt-get install pdsh

$ echo "ssh" | sudo tee /etc/pdsh/rcmd_default
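Before moving on, it can help to confirm that the tools installed above are actually on the PATH. The following is a minimal POSIX shell sketch; the function name `missing_commands` is hypothetical, and it only checks that each command can be found, not which version it is.

```shell
#!/bin/sh
# Hypothetical helper: print each named command that is NOT found on PATH.
# Prints nothing and returns 0 when every command is available.
missing_commands() {
    status=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "$cmd"
            status=1
        fi
    done
    return $status
}
```

For example, `missing_commands java javac ssh pdsh` should print nothing once the steps above are done (java and javac come from openjdk-8-jdk); any name it prints still needs to be installed.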

B. Install Hadoop

- Download the Hadoop 3.0.0-alpha2 distribution.

$ wget ftp://ftp.itu.edu.tr/Mirror/Apache/hadoop/common/hadoop-3.0.0-alpha2/hadoop-3.0.0-alpha2.tar.gz

- Extract the archive.

$ tar -xf hadoop-3.0.0-alpha2.tar.gz

- Switch to the Hadoop directory (hadoop-3.0.0-alpha2) and edit the file etc/hadoop/hadoop-env.sh. Find the JAVA_HOME line, uncomment it and set it as below. This tells Hadoop where the Java installation is. Be careful here: your Java installation folder may be different.

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64/

- Try the following command to make sure everything is fine. It should print the usage (help) message of the hadoop script.

$ bin/hadoop
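If you are unsure where the JDK lives, the JAVA_HOME value can be derived from the `javac` binary instead of guessed. A minimal sketch, assuming the openjdk-8-jdk layout on Ubuntu, where `javac` resolves through symlinks to `<JDK>/bin/javac`. The function names are hypothetical, and appending an `export` line to hadoop-env.sh (rather than uncommenting the existing one) is my own choice; since the file is sourced, an uncommented assignment there takes effect.

```shell
#!/bin/sh
# Hypothetical helper: given the resolved path of a javac binary, print the
# JDK home, i.e. the directory two levels above it.
# /usr/lib/jvm/java-8-openjdk-amd64/bin/javac -> /usr/lib/jvm/java-8-openjdk-amd64
jdk_home_from_javac() {
    dirname "$(dirname "$1")"
}

# Hypothetical helper: append "export JAVA_HOME=..." to a hadoop-env.sh file
# unless an uncommented "export JAVA_HOME=" line is already present.
set_hadoop_java_home() {
    env_file=$1
    java_home=$2
    if grep -q '^export JAVA_HOME=' "$env_file"; then
        echo "JAVA_HOME already set in $env_file" >&2
        return 0
    fi
    echo "export JAVA_HOME=$java_home" >> "$env_file"
}
```

From the Hadoop directory, `set_hadoop_java_home etc/hadoop/hadoop-env.sh "$(jdk_home_from_javac "$(readlink -f "$(command -v javac)")")"` would add the line. Note that an existing, wrong JAVA_HOME line is left untouched.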

According to [1], Hadoop can run in three modes: standalone, pseudo-distributed and fully-distributed. In standalone mode, Hadoop runs as a single Java process in non-distributed fashion, without HDFS or YARN. I won't try it here; see [1] if you are interested.

C. Pseudo-Distributed Mode

In this mode, all Hadoop daemons run on a single node, each in its own Java process. You need to be able to SSH to localhost without entering a password. To check, simply type “ssh localhost”; it should log in without asking for a password. If it does not, configure passphrase-less SSH by running the following commands.

$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

$ chmod 0600 ~/.ssh/authorized_keys

To verify the configuration, type “ssh localhost” again; you should be logged in without being asked for a password.
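If the password prompt still appears, two things the commands above are meant to guarantee are worth checking: the public key is listed in authorized_keys, and authorized_keys has mode 600. Below is a minimal POSIX shell sketch of those two checks, assuming the default file names used above; the function name is hypothetical, and it takes the .ssh directory as an argument so it can be tried on any directory. It relies on GNU `stat`, as found on Ubuntu.

```shell
#!/bin/sh
# Hypothetical helper: check that id_rsa.pub is listed in authorized_keys
# and that authorized_keys has mode 600, as set up by the commands above.
# Usage: check_ssh_keys [ssh_dir]   (defaults to ~/.ssh)
check_ssh_keys() {
    ssh_dir=${1:-$HOME/.ssh}
    pub="$ssh_dir/id_rsa.pub"
    auth="$ssh_dir/authorized_keys"
    if [ ! -f "$pub" ] || [ ! -f "$auth" ]; then
        echo "missing $pub or $auth"
        return 1
    fi
    # -x: whole-line match; -F: fixed string, since keys contain '+' and '/'.
    if ! grep -qxF -e "$(cat "$pub")" "$auth"; then
        echo "public key not found in $auth"
        return 1
    fi
    # GNU stat prints the octal permission bits with -c '%a'.
    mode=$(stat -c '%a' "$auth")
    if [ "$mode" != "600" ]; then
        echo "$auth has mode $mode, expected 600"
        return 1
    fi
    echo "ok"
}
```

For an end-to-end check without a prompt, `ssh -o BatchMode=yes localhost true` fails instead of asking for a password; run it after you have accepted the localhost host key once.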

The following steps make MapReduce jobs run locally. Since this mode has only a single node, running the jobs locally makes sense.

- Edit the etc/hadoop/core-site.xml file and make sure it has the fs.defaultFS property. Document [1] suggests using localhost, but I use the IP address of the machine. To find the IP address, type “ifconfig”. If you are using VirtualBox with a host-only network, the guest IP will look like 192.168.56.*.

* Using the IP address will make our job easier when we clone this virtual machine to set up slave nodes: we won't need to change the fs.defaultFS value on each node.

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://192.168.56.103:9000</value>
    </property>
</configuration>
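To avoid copying the IP address by hand, it can be extracted from the interface listing. A minimal sketch, assuming the VirtualBox host-only subnet 192.168.56.0/24 mentioned above and port 9000 from the configuration above; the function name is hypothetical. It reads `ifconfig` or `ip -4 addr` output from standard input, so it can also be tried on saved text.

```shell
#!/bin/sh
# Hypothetical helper: read `ifconfig` or `ip -4 addr` output on stdin and
# print the fs.defaultFS URI for the first 192.168.56.* address found.
# Assumes the VirtualBox host-only subnet 192.168.56.0/24 and port 9000.
default_fs_from_ifaces() {
    # Drop .1 (host side of the host-only network) and .255 (broadcast),
    # then keep the first remaining address.
    ip_addr=$(grep -oE '192\.168\.56\.[0-9]{1,3}' \
        | grep -vxE '192\.168\.56\.(1|255)' \
        | head -n 1)
    if [ -z "$ip_addr" ]; then
        echo "no 192.168.56.* address found" >&2
        return 1
    fi
    echo "hdfs://$ip_addr:9000"
}
```

On the guest, `ip -4 addr | default_fs_from_ifaces` prints a value in the same form as the one in core-site.xml above.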

- Edit the etc/hadoop/hdfs-site.xml file and make sure it has the dfs.replication property. Since there is only one DataNode, set it to 1.

<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
</configuration>
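Both files above contain a single property, so they can be generated with one small function. This is a sketch assuming you run it from the Hadoop directory and want to overwrite the files completely; the function name `write_site_xml` is hypothetical, and values are inserted without XML escaping, which is fine for the values used here.

```shell
#!/bin/sh
# Hypothetical helper: overwrite a Hadoop *-site.xml file with a
# configuration that holds exactly one property.
# Usage: write_site_xml FILE NAME VALUE
write_site_xml() {
    cat > "$1" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <property>
        <name>$2</name>
        <value>$3</value>
    </property>
</configuration>
EOF
}
```

For example, `write_site_xml etc/hadoop/core-site.xml fs.defaultFS hdfs://192.168.56.103:9000` and `write_site_xml etc/hadoop/hdfs-site.xml dfs.replication 1` produce the two configurations shown above. Any other properties already in those files are lost.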

- Format file system. A cluster id can be given as last argument, which is optional.

- It is recommended to start HDFS daemons as a separate user. I create a user named “hdfs” and assign password to it.

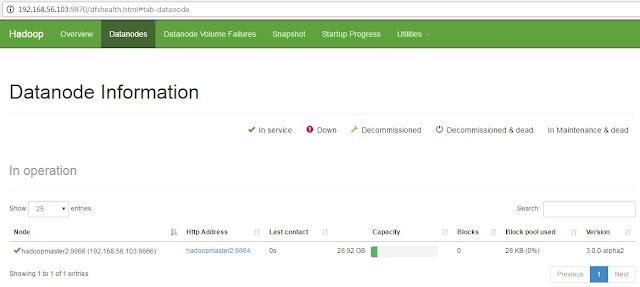

- Start NameNode daemon and DataNode daemon. NameNode daemon is the central daemon thah manages HDFS. DataNode stores the actual data.

- Once HDFS processes are ready, you may run file operations on HDFS.

- You can run MapReduce jobs here.

- To stop HDFS, run following command.

$ bin/hdfs namenode -format

$ useradd hdfs

$ passwd

$ su - hdfs

$ sbin/start-dfs.sh

To make sure that daemons started successfully, type “jps” and you should see DataNode, NameNode and SecondayNameNode listed. If your listing is different, you should check log files inside logs directory to learn what went wrong. If you find file state exception; stop running daemons with stop-dfs.sh, remove Hadoop directories under /tmp directory and re-run the format command at the previous instruction, before trying this command again. Alternatively, instead of utility script start-dfs.sh, you may run HDFS processes separately.

$ bin/hdfs --daemon start namenode $ bin/hdfs --daemon start datanode

$ bin/hdfs dfs -mkdir /tmp $ bin/hdfs dfs -ls /

$ sbin/stop-dfs.sh

Alternatively, you may stop HDFS processes separately.

$ bin/hdfs --daemon stop namenode $ bin/hdfs --daemon stop datanode
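Checking the jps listing by eye is easy to get wrong, especially since NameNode and SecondaryNameNode look alike. Here is a minimal POSIX shell sketch that compares the process names in jps output against an expected list and prints the daemons that are missing. The function name is hypothetical, and it assumes jps's default `<pid> <name>` output format.

```shell
#!/bin/sh
# Hypothetical helper: read `jps` output on stdin and print every expected
# daemon name that is not listed. Returns 1 if anything is missing.
# Usage: jps | missing_daemons NameNode DataNode SecondaryNameNode
missing_daemons() {
    # jps prints "<pid> <MainClassName>"; keep only the names.
    running=$(awk '{ print $2 }')
    missing=0
    for daemon in "$@"; do
        # -x: exact line match, so NameNode does not match SecondaryNameNode.
        if ! printf '%s\n' "$running" | grep -qxF -e "$daemon"; then
            echo "$daemon"
            missing=1
        fi
    done
    return $missing
}
```

After start-dfs.sh, `jps | missing_daemons NameNode DataNode SecondaryNameNode` should print nothing. Keep in mind that jps only lists the JVMs the current user has access to, so when the daemons run under different users you may need to run it as root to see all of them.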

D. Running Pseudo-Distributed Mode on YARN

It is possible to run MapReduce jobs on YARN in pseudo-distributed mode. You need to start the ResourceManager and NodeManager daemons after starting the HDFS daemons as explained above.

Document [1] describes some XML configuration for this step, but in my experience it is not mandatory, so I skip it to keep the configuration minimal.

- It is recommended to start the YARN daemons as a separate user. I create a user named “yarn”, assign a password to it and switch to it.

$ sudo useradd -m yarn

$ sudo passwd yarn

$ su - yarn

- Start the ResourceManager and NodeManager daemons.

$ sbin/start-yarn.sh

The output of the jps command should now contain 5 processes: NameNode, SecondaryNameNode, DataNode, ResourceManager and NodeManager. If any processes are missing, check the logs and all the previous steps.

Alternatively, instead of the utility script start-yarn.sh, you may start the YARN daemons separately.

$ bin/yarn --daemon start resourcemanager

$ bin/yarn --daemon start nodemanager

The ResourceManager web interface is available on port 8088: http://localhost:8088/ from inside the guest, or http://192.168.56.103:8088/ from the host over the host-only network.

- You can run MapReduce jobs.

- To stop Hadoop, first shut down the HDFS daemons as explained above. Then stop the YARN daemons using the utility script.

$ sbin/stop-yarn.sh

Alternatively, the YARN daemons can be stopped separately.

$ bin/yarn --daemon stop resourcemanager

$ bin/yarn --daemon stop nodemanager
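The hdfs and yarn users are created by hand above. If you repeat the setup, for example on cloned slave nodes, an idempotent helper keeps `useradd` from failing because the user already exists. A minimal sketch; the function name and the `DRY_RUN` variable are hypothetical, and it only creates the account (setting the password with `sudo passwd` stays interactive).

```shell
#!/bin/sh
# Hypothetical helper: create a service user with a home directory unless
# it already exists. With DRY_RUN set to a non-empty value, print the
# command instead of running it.
ensure_user() {
    if id -u "$1" >/dev/null 2>&1; then
        return 0    # user already exists; nothing to do
    fi
    if [ -n "$DRY_RUN" ]; then
        echo "sudo useradd -m $1"
    else
        sudo useradd -m "$1"
    fi
}
```

For example, `ensure_user hdfs && ensure_user yarn` creates whichever of the two users is missing; prefixing it with `DRY_RUN=1` only shows what would be run.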

For completeness, the whole start and stop sequence is listed below. It assumes HDFS has already been formatted.

$ su - hdfs

$ sbin/start-dfs.sh

$ su - yarn

$ sbin/start-yarn.sh

Run MapReduce jobs here.

$ su - hdfs

$ sbin/stop-dfs.sh

$ su - yarn

$ sbin/stop-yarn.sh
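The same sequence can be wrapped in a small script so that each daemon is started by its own user without switching shells. This is a sketch under stated assumptions: HADOOP_HOME points to the extracted hadoop-3.0.0-alpha2 directory (defaulting to one in your home directory), sudo may run commands as the hdfs and yarn users, and both users can access that directory. `hadoop_ctl`, `run_as` and `DRY_RUN` are hypothetical names. It keeps the order used above: HDFS before YARN, both when starting and when stopping.

```shell
#!/bin/sh
# Hypothetical wrapper around the start/stop sequence above.
# Assumes HADOOP_HOME is the Hadoop directory and that sudo may run
# commands as the hdfs and yarn users. DRY_RUN=1 only prints commands.
HADOOP_HOME=${HADOOP_HOME:-$HOME/hadoop-3.0.0-alpha2}

# Run a command as the given user (or print it in dry-run mode).
run_as() {
    user=$1
    shift
    if [ -n "$DRY_RUN" ]; then
        echo "sudo -u $user $*"
    else
        sudo -u "$user" "$@"
    fi
}

hadoop_ctl() {
    case "$1" in
        start)
            # Start YARN only if HDFS started successfully.
            run_as hdfs "$HADOOP_HOME/sbin/start-dfs.sh" &&
            run_as yarn "$HADOOP_HOME/sbin/start-yarn.sh"
            ;;
        stop)
            # Same order as in the text: HDFS first, then YARN.
            run_as hdfs "$HADOOP_HOME/sbin/stop-dfs.sh"
            run_as yarn "$HADOOP_HOME/sbin/stop-yarn.sh"
            ;;
        *)
            echo "usage: hadoop_ctl start|stop" >&2
            return 2
            ;;
    esac
}
```

Running `DRY_RUN=1 hadoop_ctl start` first is a cheap way to check the paths before anything is actually started.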
